Importance of Crawling in SEO

In the digital era, where online visibility is crucial for businesses and websites, understanding the technical processes behind search engines is vital. One of the most important processes in SEO is “crawling.” Crawling is the foundational step that search engines take to index and rank websites. Without it, your website might remain invisible to the vast majority of users searching for content.

This blog post dives deep into the concept of crawling, its importance, how it works, and why businesses must prioritize it in their SEO strategies.


What is Crawling?

[Image: How crawling works]

At its core, crawling is the process by which search engines like Google systematically browse the web to discover new and updated content for their indexes. When a search engine “crawls” your website, it sends automated bots (often called crawlers or spiders) to visit and analyze your pages. These crawlers read the content, follow internal and external links, and collect the data so that it can be indexed.

In simpler terms, crawling is how search engines map out the internet. It’s how they gather the information they later use to decide what to show when a user types in a search query.

How Do Crawlers Work?

Crawlers begin their journey with a list of known URLs, drawn from earlier crawls and from sitemaps submitted by site owners. They start with this known set of web addresses (often called seeds) and use the links found on those pages to discover other URLs. As they visit each page, crawlers analyze the content, the structure of the website, and the links. This process repeats in a loop: every newly discovered link is added to the list of pages to visit, so crawlers gather as much information as possible.
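
The discovery loop described above is essentially a breadth-first traversal of a link graph. The Python sketch below illustrates it under simplifying assumptions: instead of fetching pages over HTTP, it reads links from a small in-memory dictionary (`LINK_GRAPH`, using hypothetical example.com URLs), and `crawl` and `max_pages` are illustrative names, not part of any real search engine.

```python
from collections import deque

# Hypothetical link graph standing in for the web: each URL maps to the URLs
# it links to. A real crawler would fetch each page over HTTP and parse its links.
LINK_GRAPH = {
    "https://example.com/": ["https://example.com/about", "https://example.com/blog"],
    "https://example.com/about": ["https://example.com/"],
    "https://example.com/blog": ["https://example.com/blog/post-1", "https://other.example/"],
    "https://example.com/blog/post-1": ["https://example.com/blog"],
    "https://other.example/": [],
}


def crawl(seeds, get_links, max_pages=100):
    """Breadth-first crawl: visit seed URLs, then every new URL their links reveal."""
    frontier = deque(seeds)   # discovered URLs waiting to be visited
    seen = set(seeds)         # every URL ever queued, so none is visited twice
    visited = []              # pages in the order they were "fetched"
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        visited.append(url)
        for link in get_links(url):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited


if __name__ == "__main__":
    for url in crawl(["https://example.com/"], lambda u: LINK_GRAPH.get(u, [])):
        print(url)
```

Real crawlers layer robots.txt checks, politeness delays, and prioritization on top of this basic loop; the `max_pages` cap loosely mirrors the idea of a limited crawl budget.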

Key elements that crawlers focus on include:

- Website Structure: How your site is organized, including menus, internal links, and sitemaps.

- Meta Information: Titles, descriptions, and alt text on images, which provide more context for the content.

- Content Updates: Changes or additions to the text, images, or videos on your pages.

- Backlinks: Links pointing from other websites to yours, which signal authority and relevance.

- Outbound Links: Links from your site to others, helping crawlers understand the connectivity and references of your content.

Once crawlers have collected this data, the search engine processes it and stores it in its index, making the content searchable and accessible to users.
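
As a rough illustration of how a crawler reads some of the signals listed above, the sketch below uses Python’s standard-library `html.parser` to pull the title, meta description, image alt text, and links out of a page, then splits the links into internal and outbound. The sample HTML, the `PageSignals` class, and the `split_links` helper are hypothetical; production crawlers use far more robust parsing and rendering.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class PageSignals(HTMLParser):
    """Collect the title, meta description, image alt text, and links of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self.alt_texts = []
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # attribute values can be None, hence the `or ""` below
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content")
        elif tag == "img" and attrs.get("alt"):
            self.alt_texts.append(attrs["alt"])
        elif tag == "a" and attrs.get("href"):
            self.links.append(attrs["href"])

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def split_links(page_url, hrefs):
    """Resolve hrefs against the page URL and split them into internal and outbound."""
    host = urlparse(page_url).netloc
    internal, outbound = [], []
    for href in hrefs:
        absolute = urljoin(page_url, href)
        (internal if urlparse(absolute).netloc == host else outbound).append(absolute)
    return internal, outbound


SAMPLE_HTML = """<html><head><title>Crawling Guide</title>
<meta name="description" content="How search engines crawl pages.">
</head><body>
<img src="spider.png" alt="A crawler following links">
<a href="/blog/robots-txt">Robots.txt guide</a>
<a href="https://other.example/seo">External reference</a>
</body></html>"""

if __name__ == "__main__":
    page = PageSignals()
    page.feed(SAMPLE_HTML)
    print(page.title, page.description, page.alt_texts)
    print(split_links("https://example.com/guide", page.links))
```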

Importance of Crawling for SEO

[Image: Importance of crawling in SEO]

Crawling is crucial for search engines to recognize your website’s presence. If your site isn’t being crawled effectively, it can negatively impact your SEO. When a crawler misses pages or fails to fully understand your site’s content, it can lead to lower rankings and reduced visibility in search results.

For businesses, effective crawling lays the groundwork for better SEO outcomes, such as higher rankings and more organic traffic. If search engines can easily crawl and index your pages, they can better match your site to user queries.

Factors That Affect Crawling Efficiency

Not all websites are crawled equally. There are several factors that influence how well search engines can crawl your site. Understanding and optimizing these factors can significantly enhance your SEO strategy.

Website Architecture

A well-structured website with clear hierarchies and an intuitive design allows crawlers to navigate through the site easily. Using a logical structure ensures that important pages are easily discoverable. Sitemaps also play a pivotal role in guiding crawlers, especially for large websites.

A disorganized or confusing website structure can lead to poor crawling. If crawlers can’t navigate through your website properly, important pages may remain uncrawled and invisible to search engines.
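
A common way to check whether important pages are easily discoverable is to measure click depth: the minimum number of internal links a crawler must follow from the homepage to reach each page. The sketch below computes it with a breadth-first search over a hypothetical internal link map (`SITE`); the helper names are illustrative, and the 3-click threshold is a rule of thumb assumed for the example, not a documented search-engine limit.

```python
from collections import deque


def click_depths(links, home="/"):
    """Return the fewest clicks needed to reach each page from the homepage."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # BFS reaches each page first by its shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def pages_deeper_than(depths, max_depth=3):
    """List pages buried below an (illustrative) click-depth threshold."""
    return sorted(page for page, d in depths.items() if d > max_depth)


# Hypothetical internal link structure of a small site.
SITE = {
    "/": ["/services", "/blog"],
    "/services": ["/services/seo"],
    "/blog": ["/blog/page-2"],
    "/blog/page-2": ["/blog/page-3"],
    "/blog/page-3": ["/blog/old-post"],
}

if __name__ == "__main__":
    depths = click_depths(SITE)
    print(depths)
    print(pages_deeper_than(depths))
```

In this sample, `/blog/old-post` sits four clicks deep behind paginated archive pages; linking to it from a category page or the homepage would bring it closer to the surface.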

Crawl Budget

Each website has a limited “crawl budget,” which refers to the number of pages a search engine crawler will visit and analyze within a specific timeframe. Crawl budget is influenced by a website’s size, the speed of its server, and its overall importance to the search engine. Managing your crawl budget effectively is essential to ensure that all crucial pages are visited and indexed.

Larger websites, in particular, must prioritize their most important pages. If you waste your crawl budget on non-essential pages or duplicate content, vital sections of your site may remain uncrawled.
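
One practical way to spot crawl budget waste is to look at which URLs crawlers actually request. The sketch below assumes you have already extracted the URLs requested by a search engine crawler from your server access logs (the `hits` list is hypothetical sample data) and measures how much of that activity went to URLs with query strings, a frequent source of near-duplicate pages such as sorted or filtered listings. `crawl_budget_report` is an illustrative name, and not every parameterized URL is wasteful, so treat the result as a prompt for review rather than a verdict.

```python
from collections import Counter
from urllib.parse import urlsplit


def crawl_budget_report(crawled_urls):
    """Return the share of crawler hits on parameterized URLs, plus hits per path."""
    parameterized = [url for url in crawled_urls if urlsplit(url).query]
    share = len(parameterized) / len(crawled_urls) if crawled_urls else 0.0
    # Count how many parameter variants of each path the crawler fetched.
    variants_per_path = Counter(urlsplit(url).path for url in parameterized)
    return share, variants_per_path


# Hypothetical crawler hits taken from an access log.
hits = [
    "/shoes",
    "/shoes?sort=price",
    "/shoes?sort=price&page=2",
    "/shoes?color=red",
    "/about",
]

if __name__ == "__main__":
    share, variants = crawl_budget_report(hits)
    print(f"{share:.0%} of crawler hits went to parameterized URLs: {dict(variants)}")
```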

Server Performance

The performance of your web server directly affects how well crawlers can access your site. When a server responds slowly or returns frequent errors, search engines such as Google reduce how often they crawl it, so some pages may not get visited at all. To improve crawl efficiency, it’s crucial to maintain a reliable server and minimize downtime.

Investing in a fast, stable hosting solution ensures that crawlers can access your content without facing technical difficulties. A well-performing server not only boosts your SEO but also enhances the overall user experience.
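
To see whether your server might be slowing crawlers down, you can summarize the responses it sent them. This sketch assumes each record is a hypothetical `(status_code, response_ms)` pair already extracted from your logs for crawler requests; `server_health` is an illustrative name, and the 1,000 ms “slow” threshold is an arbitrary example, not a search-engine rule.

```python
from statistics import median


def server_health(records, slow_ms=1000):
    """Summarize (status_code, response_ms) records for crawler requests."""
    if not records:
        return {"requests": 0, "server_error_rate": 0.0, "slow_rate": 0.0, "median_ms": None}
    total = len(records)
    server_errors = sum(1 for status, _ in records if 500 <= status <= 599)
    slow = sum(1 for _, ms in records if ms > slow_ms)
    return {
        "requests": total,
        "server_error_rate": server_errors / total,
        "slow_rate": slow / total,
        "median_ms": median(ms for _, ms in records),
    }


# Hypothetical crawler requests: (HTTP status, response time in milliseconds).
SAMPLE = [(200, 120), (200, 340), (503, 30), (200, 1800), (500, 25)]

if __name__ == "__main__":
    print(server_health(SAMPLE))
```

A rising server-error rate or median response time is a signal to look at hosting before it starts reducing how often crawlers visit.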

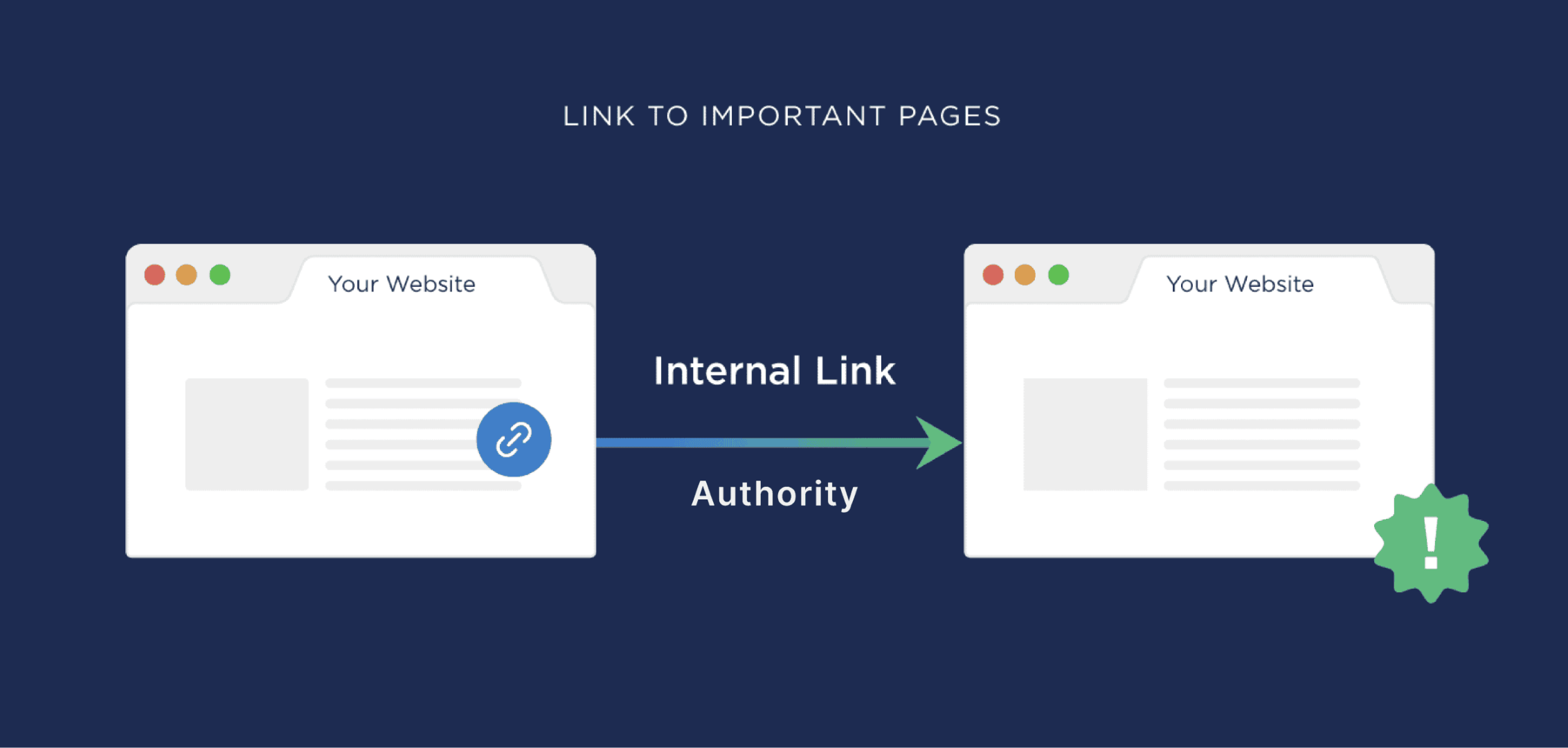

Internal Linking

[Image: Importance of internal linking in the crawling process]

Effective internal linking helps crawlers understand the relationships between different pages on your website. When you link your content logically, crawlers can follow these links to discover all the pages on your site. Well-placed internal links create a network of interconnected content, allowing crawlers to flow through your site smoothly.

On the other hand, poor internal linking or orphaned pages (pages that no other page on your site links to) can prevent crawlers from discovering and fully indexing your site. It’s essential to maintain a strong internal linking structure to maximize crawl efficiency.
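
Orphaned pages can be found by comparing the URLs you expect to be crawled, such as those listed in your sitemap, with the pages a crawler can actually reach by following internal links from the homepage. The sketch below does this with hypothetical data; `find_orphans` is an illustrative helper name.

```python
def find_orphans(sitemap_urls, internal_links, home="/"):
    """Return sitemap URLs that cannot be reached by following internal links from home."""
    reachable = {home}
    stack = [home]
    while stack:  # depth-first walk over the internal link graph
        page = stack.pop()
        for target in internal_links.get(page, []):
            if target not in reachable:
                reachable.add(target)
                stack.append(target)
    return sorted(set(sitemap_urls) - reachable)


# Hypothetical sitemap entries and internal links.
SITEMAP_URLS = ["/", "/services", "/blog", "/blog/post-1", "/landing/spring-sale"]
INTERNAL_LINKS = {
    "/": ["/services", "/blog"],
    "/blog": ["/blog/post-1"],
    "/landing/spring-sale": ["/services"],  # links out, but nothing links in
}

if __name__ == "__main__":
    print(find_orphans(SITEMAP_URLS, INTERNAL_LINKS))
```

Walking the graph from the homepage, rather than only checking whether a URL appears as any link target, also catches groups of pages that link only to each other.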

Duplicate Content

Duplicate content can waste your crawl budget: crawlers spend requests fetching pages that add nothing new, and search engines will usually index only one version of them. Pages with the same or very similar content also confuse search engines about which version to show, leading them to crawl fewer unique pages. To avoid this, ensure that each page on your website offers valuable and distinct content.

Canonical tags are an effective tool for handling duplicate content, as they tell search engines which version of a page you prefer to have indexed.
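
A canonical tag is a `<link rel="canonical" href="...">` element in a page’s `<head>`. The sketch below uses Python’s standard `html.parser` to read that tag from each crawled page and group URLs by the canonical version they declare, one way to audit whether duplicates point to a single preferred page. The `PAGES` data and helper names are hypothetical, and search engines treat canonical tags as a signal rather than a strict command.

```python
from collections import defaultdict
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Record the href of the first <link rel="canonical"> tag on a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attrs = dict(attrs)
        rel_values = (attrs.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attrs.get("href")


def group_by_canonical(pages):
    """Map each declared canonical URL to the crawled URLs that point to it."""
    groups = defaultdict(list)
    for url, markup in pages.items():
        finder = CanonicalFinder()
        finder.feed(markup)
        finder.close()
        # Pages without a canonical tag are grouped under their own URL.
        groups[finder.canonical or url].append(url)
    return dict(groups)


# Hypothetical crawled pages (URL -> HTML); only the relevant tags are shown.
PAGES = {
    "https://example.com/shoes": '<link rel="canonical" href="https://example.com/shoes">',
    "https://example.com/shoes?sort=price": '<link rel="canonical" href="https://example.com/shoes">',
    "https://example.com/about": "<title>About</title>",
}

if __name__ == "__main__":
    for canonical, urls in group_by_canonical(PAGES).items():
        print(canonical, "<-", urls)
```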

How to Make Sure Your Website Is Crawled Correctly

Given the importance of crawling, there are several steps you can take to make sure your website is crawled effectively. These actions can improve your site’s visibility in search engine results, leading to higher traffic and better overall SEO performance.

Create a Comprehensive Sitemap

A sitemap acts as a blueprint for your website, helping crawlers understand its structure and find all your pages. Submitting a sitemap to search engines helps them discover your pages and crawl your site more efficiently. Most Content Management Systems (CMS) generate a sitemap automatically, but you can also create one manually if needed.
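
If you do need to build a sitemap yourself, the XML format defined by the sitemaps.org protocol is simple: a `<urlset>` root containing one `<url>` entry per page, each with a required `<loc>` and an optional `<lastmod>` date. The sketch below generates one with Python’s built-in `xml.etree.ElementTree`; `build_sitemap` is an illustrative name, and the URLs and dates are placeholder values.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:  # optional W3C date, e.g. "2024-01-15"
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap([
        ("https://example.com/", "2024-01-15"),
        ("https://example.com/blog", None),
    ]))
```

The protocol limits a single sitemap file to 50,000 URLs (and 50 MB uncompressed), so large sites split their URLs across several files listed in a sitemap index.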

Optimize for Mobile

With the increasing dominance of mobile search, it’s essential to have a mobile-optimized website. Google uses mobile-first indexing, which means it primarily crawls and indexes the mobile version of your pages. A mobile-responsive design ensures that crawlers can access the same content seamlessly, regardless of the user’s device.

Improve Site Speed

As mentioned earlier, a slow website can prevent crawlers from indexing your pages efficiently. Enhancing your website’s speed improves both the user experience and the crawler’s ability to access content. Techniques like image compression, caching, and minimizing JavaScript can all contribute to faster load times.

Monitor Crawling with Google Search Console

Google Search Console is a powerful tool that helps you track how well your website is being crawled. It provides insights into crawl errors, pages that are not indexed, and other SEO issues. By regularly reviewing your Search Console data, you can address any problems that might be hindering your website’s crawlability.

Fix Crawl Errors

Crawl errors occur when a search engine bot tries to reach a page but fails to do so. These errors can be caused by broken links, server issues, or faulty redirects such as redirect chains and loops. Fixing these errors is essential to ensure that all important pages are accessible to crawlers.

Google Search Console reports the crawl errors that Googlebot encounters, making it easier to address them and keep your website fully crawlable.
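
You can also audit your own internal links for two common error types: links that end in a 4xx/5xx response and links that pass through redirect chains. The sketch below assumes you have already collected a link map, the status code of each URL, and the target of each redirect (all hypothetical sample data here); the helper names are illustrative, and the `max_hops` limit of 10 is an arbitrary guard against redirect loops.

```python
def follow_redirects(url, redirects, max_hops=10):
    """Follow redirect targets from `url`, following at most max_hops redirects."""
    path = [url]
    while path[-1] in redirects:
        if len(path) > max_hops:
            break  # give up: probably a redirect loop
        path.append(redirects[path[-1]])
    return path


def audit_links(links, statuses, redirects):
    """Find links that end in an error and links that pass through redirect chains.

    links:     page -> URLs it links to
    statuses:  URL -> HTTP status code returned when that URL was fetched
    redirects: URL -> redirect target, for URLs that answered with a 3xx status
    """
    broken, chains = [], []
    for page, targets in links.items():
        for target in targets:
            path = follow_redirects(target, redirects)
            final = path[-1]
            if len(path) > 2:  # two or more redirect hops before the final URL
                chains.append((page, path))
            status = statuses.get(final)
            # Unresolved loops, unknown URLs, and 4xx/5xx responses all count as errors.
            if final in redirects or status is None or status >= 400:
                broken.append((page, target, status))
    return broken, chains


# Hypothetical crawl data for a single page.
LINKS = {"/": ["/old-page", "/contact", "/missing"]}
STATUSES = {"/old-page": 301, "/new-page": 301, "/final-page": 200, "/contact": 200, "/missing": 404}
REDIRECTS = {"/old-page": "/new-page", "/new-page": "/final-page"}

if __name__ == "__main__":
    broken, chains = audit_links(LINKS, STATUSES, REDIRECTS)
    print("broken:", broken)
    print("redirect chains:", chains)
```

Pointing each link straight at its final destination removes the chain and saves crawlers a request per hop.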

The Role of Robots.txt in Crawling

[Image: The role of robots.txt in the crawling process]

The robots.txt file is a critical component in controlling how search engines crawl your website. This file tells crawlers which pages or sections of your site they are allowed or disallowed to visit. By using robots.txt strategically, you can guide crawlers to prioritize important content and avoid unnecessary pages.

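For instance, if there are areas of your website that you don’t want crawlers to spend time on (like internal tools or admin sections), you can block crawlers from accessing them via robots.txt. However, it’s important to use this tool carefully. Robots.txt controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it, and because the file is publicly readable, it should never be relied on to hide private user information. To keep a page out of search results, use a noindex directive or password protection instead. Blocking essential pages from being crawled can also severely harm your SEO.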
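
Python’s standard library ships a robots.txt parser, `urllib.robotparser.RobotFileParser`, which makes it easy to test rules before you deploy them. The sketch below checks a few paths against a sample file for a hypothetical example.com site; `is_allowed` is an illustrative helper. One caveat: Python’s parser applies the first rule that matches, whereas Google uses the most specific (longest) matching rule, so the sample lists the narrower `Disallow` rules before `Allow: /` to make both interpretations agree.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart
Allow: /

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(path, user_agent="Googlebot"):
    """Return True if the sample rules let `user_agent` crawl this path."""
    return parser.can_fetch(user_agent, "https://example.com" + path)


if __name__ == "__main__":
    for path in ["/", "/blog/crawling-guide", "/admin/settings", "/cart?item=42"]:
        print(path, "allowed" if is_allowed(path) else "blocked")
```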

Conclusion

Crawling is the lifeblood of search engine optimization; without it, indexing and ranking websites would be impossible. It is the essential first step in how search engines such as Google and Bing discover new web pages, understand their content, and make informed decisions about how to display them to users. The success of your website in search engine rankings hinges on how well it is crawled, making crawling a critical component of online visibility and SEO performance. When search engine crawlers visit your site, they do more than just scan pages; they gather the information search engines use to evaluate the relevance, quality, and structure of your content.

Therefore, ensuring that your website is structured for efficient crawling is crucial. Websites with strong architecture, clear internal linking, optimized meta tags, and minimal errors are more likely to be crawled thoroughly, indexed accurately, and ranked favorably. An efficiently crawled site not only helps search engines discover all of your important content but also ensures that your pages are indexed promptly and correctly. This ultimately improves your chances of appearing in relevant search results, driving more organic traffic to your site.

However, it’s important to understand that crawling is not a one-time event. Websites evolve, and as you add new content, update existing pages, or change your site’s structure, maintaining crawlability becomes an ongoing responsibility.

Author Name: Muhammed Arshad ch

Learner of DigiSkillz, Digital Marketing Institute in Kottakkal
